SQL Server pretty much works out of the box – go through the install wizard, click Next, Next, Next… create a database and off you go.

However, that doesn't mean that everything is taken care of and no further changes are required.

There are some changes that I like to make to the vanilla installation. Some of these are personal preference, but I think all of them bring benefits. As I work for a project team, I often have to install SQL Server on new hardware, and I work through the list below to make sure I have covered everything.

I have a selection of SQL jobs I like to set up, along with some server configuration options. I won’t be dealing with hardware or RAID configuration here, only with the configuration of the SQL Server software itself, although I may write a post about those in the future.

So here it is:

Model Database

Change the model database to the SIMPLE recovery model and make sure its files are set to grow by a fixed number of megabytes rather than by a percentage, so that any databases created in the future inherit these properties.
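
A minimal T-SQL sketch of this change, assuming model still has its default logical file names (`modeldev` and `modellog`). The growth increments of 256MB and 128MB are illustrative values, not a recommendation from this post. The final query lets you confirm the result.

```sql
USE [master];
GO
-- Switch model to SIMPLE recovery; new databases inherit this setting.
ALTER DATABASE [model] SET RECOVERY SIMPLE;
GO
-- Fixed-size growth increments (illustrative values, adjust to suit).
ALTER DATABASE [model] MODIFY FILE (NAME = N'modeldev', FILEGROWTH = 256MB);
ALTER DATABASE [model] MODIFY FILE (NAME = N'modellog', FILEGROWTH = 128MB);
GO
-- Confirm: growth is held in 8 KB pages unless is_percent_growth = 1.
SELECT d.name,
       d.recovery_model_desc,
       mf.name AS logical_name,
       CASE WHEN mf.is_percent_growth = 1
            THEN CAST(mf.growth AS varchar(20)) + ' %'
            ELSE CAST(mf.growth * 8 / 1024 AS varchar(20)) + ' MB'
       END AS growth
FROM sys.databases AS d
JOIN sys.master_files AS mf ON mf.database_id = d.database_id
WHERE d.name = N'model';
```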

Configure Database Mail

Some of the scripts below rely on an Operator being configured to receive alerts, so we need to configure Database Mail. Setting it up is out of scope for this article, but details can be found here.
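
Once Database Mail is working, the Operator itself can be created with `msdb.dbo.sp_add_operator`. This is a minimal sketch: the operator name `DBA Team`, the address `dba@example.com` and the profile name `DBA Mail Profile` are hypothetical placeholders. The optional test message uses `sp_send_dbmail` to confirm mail actually goes out before anything depends on it.

```sql
USE [msdb];
GO
-- Hypothetical operator; replace the name and address with your own.
EXEC msdb.dbo.sp_add_operator
    @name = N'DBA Team',
    @enabled = 1,
    @email_address = N'dba@example.com';
GO
-- Optional: send a test message through Database Mail
-- (profile name assumed; use the one you created).
EXEC msdb.dbo.sp_send_dbmail
    @profile_name = N'DBA Mail Profile',
    @recipients = N'dba@example.com',
    @subject = N'Database Mail test',
    @body = N'If you can read this, Database Mail is working.';
GO
```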

Configure SQL Agent job notification emails

After you have configured Database Mail, you need to configure SQL Server Agent to use it. Follow these steps:

- Right-click SQL Server Agent and select Properties.

- Select the Alert System page.

- Select Enable mail profile.

- Select your mail profile.

- Click OK.

- Restart the SQL Server Agent service.
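
For scripted builds, the same setting can probably be applied with `msdb.dbo.sp_set_sqlagent_properties`, the undocumented procedure also used for job history below. The `@use_databasemail` and `@databasemail_profile` parameter names are what SSMS generates when scripting this dialog on the versions I have seen; treat them as an assumption and fall back to the GUI steps if your version rejects them. The second part is a sketch that sets every existing job to email the hypothetical `DBA Team` operator on failure (the operator must already exist). The Agent service still needs restarting afterwards.

```sql
USE [msdb];
GO
-- Point SQL Agent at a Database Mail profile (profile name is hypothetical).
-- Parameter names assumed from SSMS-generated scripts; verify on your version.
EXEC msdb.dbo.sp_set_sqlagent_properties
    @use_databasemail = 1,
    @databasemail_profile = N'DBA Mail Profile';
GO
-- Email the hypothetical 'DBA Team' operator whenever an existing job fails.
-- @notify_level_email: 1 = on success, 2 = on failure, 3 = on completion.
DECLARE @job_name sysname;
DECLARE job_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT name FROM msdb.dbo.sysjobs;
OPEN job_cursor;
FETCH NEXT FROM job_cursor INTO @job_name;
WHILE @@FETCH_STATUS = 0
BEGIN
    EXEC msdb.dbo.sp_update_job
        @job_name = @job_name,
        @notify_level_email = 2,
        @notify_email_operator_name = N'DBA Team';
    FETCH NEXT FROM job_cursor INTO @job_name;
END;
CLOSE job_cursor;
DEALLOCATE job_cursor;
GO
```

Because the loop only touches jobs that already exist, it is worth re-running once the jobs in the next section have been created.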

SQL jobs

This is a list of the jobs and scripts I set up by default on every new server:

- MaintenanceSolution by Ola Hallengren – includes backup and other maintenance jobs. Only the jobs are created, so you will need to create the schedules manually. You may also want to change some of the job steps, for example adding compression to the backup jobs.

- Weekly Cycle of Error Logs by Paul Hewson – prevents the error log from bloating by cycling it every week (this script includes the schedule).

- sp_WhoIsActive by Adam Machanic – a useful diagnostic stored procedure.

- dbWarden by Stevie Rounds and Michael Rounds – a health report emailed to your inbox each day.

- sp_Blitz by Brent Ozar – checks that everything is configured correctly.

- Monitor File Growth by Paul Hewson – keeps a record of the growth of data and log files, which can be queried later using SSRS or Excel.

- Agent Alerts Management Pack by Tibor Karaszi
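
Because MaintenanceSolution creates its jobs without schedules, these can be added with `sp_add_schedule` and `sp_attach_schedule`. This is a minimal sketch that assumes the script's default job name `DatabaseBackup - USER_DATABASES - FULL`. The schedule name `Daily 01:00` and its start time are hypothetical. The commented command at the end shows the kind of job-step text that turns on compression through Ola Hallengren's `@Compress` parameter; the backup directory `G:\Backup` is a placeholder.

```sql
USE [msdb];
GO
-- Hypothetical daily schedule starting at 01:00:00 (HHMMSS).
EXEC msdb.dbo.sp_add_schedule
    @schedule_name = N'Daily 01:00',
    @freq_type = 4,               -- 4 = daily
    @freq_interval = 1,           -- every 1 day
    @active_start_time = 010000;
GO
-- Attach it to the full-backup job created by MaintenanceSolution
-- (default job name assumed).
EXEC msdb.dbo.sp_attach_schedule
    @job_name = N'DatabaseBackup - USER_DATABASES - FULL',
    @schedule_name = N'Daily 01:00';
GO
-- Example job-step command with compression enabled
-- (directory is a placeholder):
-- EXECUTE [dbo].[DatabaseBackup]
--     @Databases = 'USER_DATABASES',
--     @Directory = N'G:\Backup',
--     @BackupType = 'FULL',
--     @Verify = 'Y',
--     @CheckSum = 'Y',
--     @Compress = 'Y';
```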

When you have run all the scripts above, it is worth running the following script so that we log as much SQL Agent job history as we can:

Enable additional logging for SQL Agent jobs by Paul Hewson

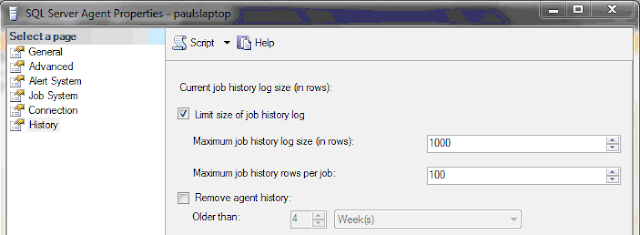

SQL Agent History

I find that the default limits for SQL Agent job history (1,000 rows in total and 100 rows per job) are usually not enough, so I like to increase them by two orders of magnitude with this command:

```sql
USE [msdb]
GO
EXEC msdb.dbo.sp_set_sqlagent_properties @jobhistory_max_rows=100000,
    @jobhistory_max_rows_per_job=10000
GO
```

NB: this will increase the size of msdb slightly, which is another reason to keep it off the C: drive.
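
To check the limits before and after the change, and to see which jobs are using the most history rows, something like the following should work. `sp_get_sqlagent_properties` is undocumented, like its `sp_set_` counterpart above, so its output columns may differ between versions.

```sql
USE [msdb];
GO
-- Current Agent settings, including jobhistory_max_rows and
-- jobhistory_max_rows_per_job (undocumented procedure).
EXEC msdb.dbo.sp_get_sqlagent_properties;
GO
-- History rows currently held per job, largest first.
SELECT j.name AS job_name,
       COUNT(*) AS history_rows
FROM msdb.dbo.sysjobhistory AS h
JOIN msdb.dbo.sysjobs AS j ON j.job_id = h.job_id
GROUP BY j.name
ORDER BY history_rows DESC;
```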

Configure TempDB with additional files if required

This is a little contentious, but if I know the server is going to be used quite heavily, with several large databases installed, I will move TempDB to its own LUN/drive and split it into several separate but equally sized files.
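
Moving TempDB to its own drive is done by pointing its files at the new location and restarting the SQL Server service, at which point the files are recreated there. A sketch, assuming the default logical names `tempdev` and `templog` and the same `F:\MSSQL\Data` folder used in the script below, which must already exist and be writable by the service account:

```sql
USE [master];
GO
-- New locations take effect at the next service restart.
ALTER DATABASE tempdb
MODIFY FILE (NAME = tempdev, FILENAME = 'F:\MSSQL\Data\tempdb.mdf');
ALTER DATABASE tempdb
MODIFY FILE (NAME = templog, FILENAME = 'F:\MSSQL\Data\templog.ldf');
GO
-- After restarting, confirm where the files now live.
SELECT name, physical_name
FROM sys.master_files
WHERE database_id = DB_ID(N'tempdb');
```

The old files are left behind on the original drive and can be deleted once the service is running from the new location.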

Resize the default TempDB data file to 1024MB, then add additional files of the same size with a script similar to this:

```sql
USE [master];
GO
-- Resize the existing data file to match the new files
-- (fails if the file is already 1024MB or larger).
ALTER DATABASE tempdb
MODIFY FILE (NAME = tempdev, SIZE = 1024MB);

-- Add seven more equally sized data files; .ndf is the usual
-- extension for secondary data files.
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev2, FILENAME = 'F:\MSSQL\Data\tempdb2.ndf', SIZE = 1024MB);
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev3, FILENAME = 'F:\MSSQL\Data\tempdb3.ndf', SIZE = 1024MB);
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev4, FILENAME = 'F:\MSSQL\Data\tempdb4.ndf', SIZE = 1024MB);
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev5, FILENAME = 'F:\MSSQL\Data\tempdb5.ndf', SIZE = 1024MB);
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev6, FILENAME = 'F:\MSSQL\Data\tempdb6.ndf', SIZE = 1024MB);
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev7, FILENAME = 'F:\MSSQL\Data\tempdb7.ndf', SIZE = 1024MB);
ALTER DATABASE tempdb
ADD FILE (NAME = tempdev8, FILENAME = 'F:\MSSQL\Data\tempdb8.ndf', SIZE = 1024MB);
GO
```
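
Rather than repeating the statement for each file, the additional files can also be generated in a loop. This is a sketch using dynamic SQL, meant as an alternative to the script above rather than something to run after it: `@file_count` (8 here, to match) and the path are assumptions to adapt, and it assumes the logical names `tempdev2` onwards are not already in use. The closing query confirms the data files are equally sized.

```sql
USE [master];
GO
DECLARE @file_count int = 8;   -- total data files wanted, including tempdev
DECLARE @i int = 2;            -- tempdev (file 1) already exists
DECLARE @sql nvarchar(max);

WHILE @i <= @file_count
BEGIN
    -- Builds e.g. ALTER DATABASE tempdb ADD FILE (NAME = tempdev2,
    -- FILENAME = 'F:\MSSQL\Data\tempdb2.ndf', SIZE = 1024MB);
    SET @sql = N'ALTER DATABASE tempdb ADD FILE (NAME = tempdev'
             + CAST(@i AS nvarchar(10))
             + N', FILENAME = ''F:\MSSQL\Data\tempdb'
             + CAST(@i AS nvarchar(10))
             + N'.ndf'', SIZE = 1024MB);';
    EXEC sys.sp_executesql @sql;
    SET @i += 1;
END;
GO
-- Data file sizes in MB (size is stored in 8 KB pages).
SELECT name, physical_name, size * 8 / 1024 AS size_mb
FROM sys.master_files
WHERE database_id = DB_ID(N'tempdb') AND type_desc = N'ROWS';
```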

Change memory settings

This is pretty much an essential change, because by default SQL Server will use all of the available memory on the server, which can leave too little for the operating system and cause problems. I use the details from this post by Glenn Berry to determine the values.
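
The setting itself is changed with `sp_configure`. A minimal sketch: 28672 MB is a hypothetical value for a server with 32 GB of RAM that leaves 4 GB for the operating system, so substitute the figure you arrive at from Glenn Berry's guidance. The first query shows how much physical memory the server has, and the change takes effect without a restart.

```sql
-- Physical memory on the server, in MB.
SELECT total_physical_memory_kb / 1024 AS total_physical_memory_mb
FROM sys.dm_os_sys_memory;
GO
-- 'max server memory (MB)' is an advanced option.
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;
GO
-- Hypothetical value: 32 GB server, 4 GB left for the OS.
EXEC sys.sp_configure N'max server memory (MB)', 28672;
RECONFIGURE;
GO
-- Confirm the running value.
SELECT name, value_in_use
FROM sys.configurations
WHERE name = N'max server memory (MB)';
```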

Configure the Trace Flags

By default SQL Server captures only limited details on deadlocks when they occur, so I enable trace flag 1222, which writes detailed deadlock information to the error log. I also think it is convenient to know when Auto Update Statistics events have occurred, so I enable trace flag 8721. To make sure both trace flags are enabled every time the server starts, I add them as startup parameters (-T1222 and -T8721) in the properties of the SQL Server service. The screen shot below is taken from SQL 2008 using SQL Server Configuration Manager:

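
Startup parameters only take effect when the service restarts. To switch the same trace flags on immediately, they can also be enabled globally with `DBCC TRACEON`. This is a sketch; flags set this way are lost at the next restart, which is why the startup parameters are still needed.

```sql
-- Enable the trace flags globally (-1) for the running instance.
DBCC TRACEON (1222, -1);
DBCC TRACEON (8721, -1);
GO
-- List all globally enabled trace flags to confirm.
DBCC TRACESTATUS (-1);
```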
Check Configuration

After you have done all of the above, you could run sp_Blitz against the server to see if you have missed anything.
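
A sketch of that final check. It assumes sp_Blitz was installed in `master`; `@CheckServerInfo = 1` adds server-level information to the output, though its parameters have changed across releases, so check the version you have. The query that follows is a quick spot check of a few of the settings made in this post.

```sql
-- Full health check (sp_Blitz assumed to be installed in master).
EXEC master.dbo.sp_Blitz @CheckServerInfo = 1;
GO
-- Spot check of settings covered above.
SELECT N'model recovery model' AS setting,
       CAST(recovery_model_desc AS nvarchar(60)) AS value
FROM sys.databases
WHERE name = N'model'
UNION ALL
SELECT N'tempdb data files',
       CAST(COUNT(*) AS nvarchar(60))
FROM sys.master_files
WHERE database_id = DB_ID(N'tempdb') AND type_desc = N'ROWS'
UNION ALL
SELECT name, CAST(value_in_use AS nvarchar(60))
FROM sys.configurations
WHERE name = N'max server memory (MB)';
GO
-- Status of the two trace flags set at startup.
DBCC TRACESTATUS (1222, 8721);
```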

That's it! I may amend this posting from time to time but generally if you follow the steps above you should have a well configured and stable server. If you can think of anything else, please let me know.
